Can AI Replace a Human Adviser?

Artificial intelligence (AI) tools like ChatGPT are increasingly capable of analysing financial data, generating reports, and answering complex questions in seconds. But the real question is: can AI truly replace a human financial adviser?

In this series, ‘Can AI Replace a Human Adviser?’, we examine case studies of clients navigating major life transitions and put AI to the test against a Providend Client Adviser. As these stories unfold, we reveal what AI gets right, what it gets dangerously wrong, and why human advice may still matter more than you think.

Case Study: Retirement and Career Switch

Bob, 45, and his wife Elsie, also 45, have two children — a 10-year-old son and an 8-year-old daughter. They plan for one child to study at a local university and the other overseas. The couple earns a combined annual income of $200,000, saves about $80,000 a year, and holds $1.5 million in investible assets ($1 million in Singapore shares and $500,000 in bank savings). They also own a fully paid-up condo valued at $1.6 million.

Their goal is to retire at 60 while maintaining their current standard of living and potentially leaving a modest legacy for their children. With an average risk tolerance, they are comfortable with some market fluctuations but prefer not to take unnecessary risks.

Recently, however, both have begun to feel the strain of two decades in demanding corporate roles. They are now seriously considering switching to lower-stress, lower-paying jobs — even if it means saving less before retirement. Their key question is: “If we move to less stressful jobs now but still plan to retire at 60, can we still afford the retirement lifestyle we want?”

To find out, we posed Bob and Elsie’s exact situation to ChatGPT and asked it to act as their financial adviser. Our Client Adviser, Jit Hwee, reviewed the AI-generated plan, analysed its conclusions, and compared them against what a real human adviser would do. Here is what he found.

What ChatGPT Did Well

When we first ran Bob and Elsie’s numbers through ChatGPT, I must admit that its structure was impressive. In seconds, it could:

- Analyse their current financial position

- Estimate their retirement expenses

- Account for inflation, investment returns and university costs

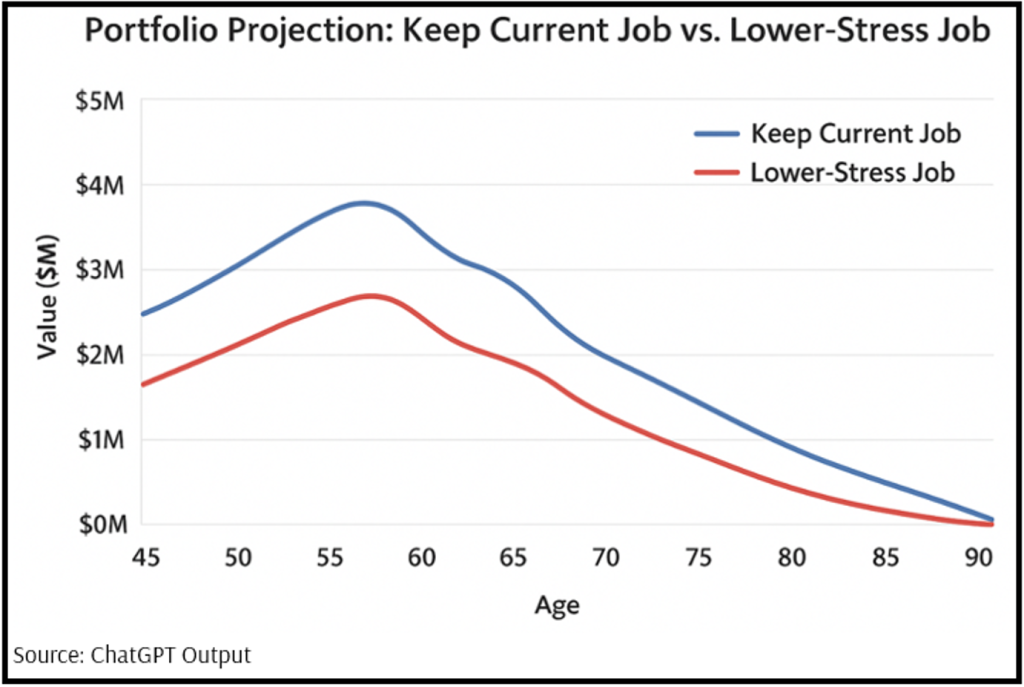

- Compare two scenarios — keeping their current jobs or switching to lower-stress roles

- Instantly regenerate projections when asked to extend their plan from age 90 to 95

Based on these calculations, ChatGPT concluded that if they stayed in their current jobs, they could retire comfortably and maintain their desired lifestyle. If they switched to lower-stress, lower-paying jobs, they might have to retire a little later or adjust their spending slightly, but they would still ‘be fine’.

From a purely analytical standpoint, it was fast, clear, and logical. It produced in seconds what a human adviser might normally take hours to compute.

But as I looked through the output, one thing became clear: while AI can process numbers, it cannot understand the complexity of people and their changing needs.

The Limitations Beneath the Surface

When we examined ChatGPT’s analysis more closely, several limitations emerged that could make a significant difference in real life.

1. Incomplete Data and Assumptions (It did not ask the questions I would naturally ask my clients)

Because ChatGPT works only with what is typed in, it did not pause to ask clarifying questions, the kind an experienced adviser would instinctively ask to avoid making wrong assumptions. As a result:

- Key Singapore-specific realities like CPF contributions and National Service timelines were ignored, even though they are crucial to any wealth plan.

- Insurance, the foundation of any comprehensive wealth plan, was entirely missing from the conversation. Insurance is a key risk-mitigation tool that protects assets from unforeseen events and keeps the plan intact, yet ChatGPT neither highlighted it nor asked the couple for the relevant information.

- The model assumed a 4% investment return both before and after retirement without explaining why, and this may not be realistic. In general, the expected return should be lower during retirement because the overall portfolio is usually de-risked.

- It did not ask for a breakdown of the Singapore share holdings, which could reveal idiosyncratic risk, concentration risk and home-country bias.

- It did not question why the couple held $500,000 in cash. A human adviser would explore this, because the answer often reveals deeper attitudes toward risk or past financial trauma.

These are the kinds of details a human adviser would naturally probe, because we know numbers alone never tell the full story.

2. Lack of Context and Emotional Understanding

When Bob and Elsie said, “Can we afford to slow down?”, they were not simply asking a maths question. They were asking a life question, or what we at Providend call an ikigai question. Yet ChatGPT never brought up burnout, health, time with their children, or what truly matters at this stage of life.

This emotional context is invisible to AI. Sure, it can calculate spending, but it cannot understand stress. It can plot inflation, but it cannot sense when someone is feeling overwhelmed.

A large part of real advisory work happens not in the numbers, but in the conversations between us as Client Advisers and our clients and their families.

3. Information Overload Without Guidance

ChatGPT produced pages of calculations and charts. The average person would find it difficult to interpret them, to know which parts truly mattered, or to write effective follow-up prompts. A human adviser does not just present information. We explain, prioritise, simplify and walk clients through decisions step by step, wherever they are starting from, whether they have financial training or no interest in personal finance at all.

4. Unverified Accuracy (It made errors that it did not even know were errors)

AI can sound confident but still be wrong. It draws on generic models and publicly available information, and it may not check the reliability of its sources or the local context. When major life decisions are at stake, that uncertainty is a serious risk.

In this case, the following errors and inaccuracies surfaced:

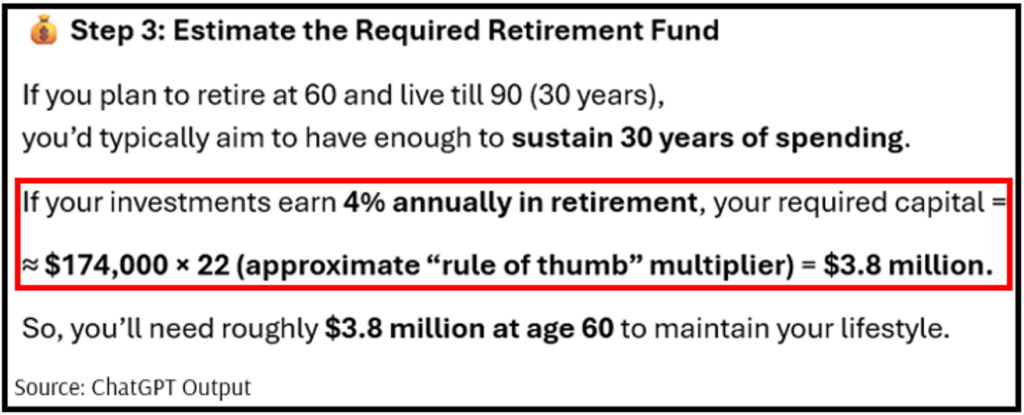

A) Incorrect Computation of Required Capital

- ChatGPT generated the wrong figure. Based on the planning parameters of a 4% return and inflation-adjusted spending of $174,000 a year from 2040 ($120,000 in today’s value), the required capital should be $4.35 million, not $3.8 million.

- The error likely arose from pairing the 4% return with a 22X multiplier (22 × $174,000 ≈ $3.8 million). Using a 22X multiplier to arrive at required capital of $3.8 million would require a 5.1% return.
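To make the arithmetic in point A concrete, here is a minimal Python sketch of one common way to estimate required capital: the present value of spending that starts at $174,000 and grows with inflation every year, discounted at the assumed 4% return. The retirement horizons (30 years to age 90, 35 years to age 95), the start-of-year withdrawals and the function names are illustrative assumptions of ours. The article does not state the exact method behind the $4.35 million figure, so this sketch is not expected to reproduce it exactly.

```python
"""Rough required-capital check for a retirement that starts in 2040.

Illustrative assumptions (not the adviser's actual model):
- spending grows each year at a constant inflation rate,
- withdrawals are taken at the start of each year,
- the portfolio earns a constant annual return throughout retirement.
"""


def implied_inflation(today_value, future_value, years):
    """Constant annual inflation rate that turns today_value into future_value."""
    return (future_value / today_value) ** (1 / years) - 1


def required_capital(first_year_spend, annual_return, inflation, years, at_start=True):
    """Present value, at retirement, of `years` inflation-growing withdrawals."""
    if years <= 0:
        return 0.0
    if abs(annual_return - inflation) < 1e-12:
        # Growth exactly offsets discounting: each end-of-year payment is worth C / (1 + r).
        factor = years / (1 + annual_return)
    else:
        # Growing-annuity formula for payments at the end of each year.
        ratio = (1 + inflation) / (1 + annual_return)
        factor = (1 - ratio ** years) / (annual_return - inflation)
    if at_start:
        # Move every payment one year earlier (annuity due).
        factor *= 1 + annual_return
    return first_year_spend * factor


if __name__ == "__main__":
    spend_2040 = 174_000
    # $120,000 today growing to $174,000 by 2040 (age 45 to 60) implies about 2.5% a year.
    infl = implied_inflation(120_000, spend_2040, 15)
    print(f"Implied inflation: {infl:.2%}")
    print(f"22X multiplier: ${22 * spend_2040:,.0f}")
    for horizon in (30, 35):  # retire at 60 and plan to age 90 or 95
        capital = required_capital(spend_2040, 0.04, infl, horizon)
        print(f"{horizon} years at 4%: ${capital:,.0f} ({capital / spend_2040:.1f}X)")
```

Under these assumptions the multiplier works out to about 24.5X for a 30-year retirement and about 27.7X for 35 years, both above the 22X that ChatGPT applied, which is consistent with the point that $3.8 million understates the capital needed.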

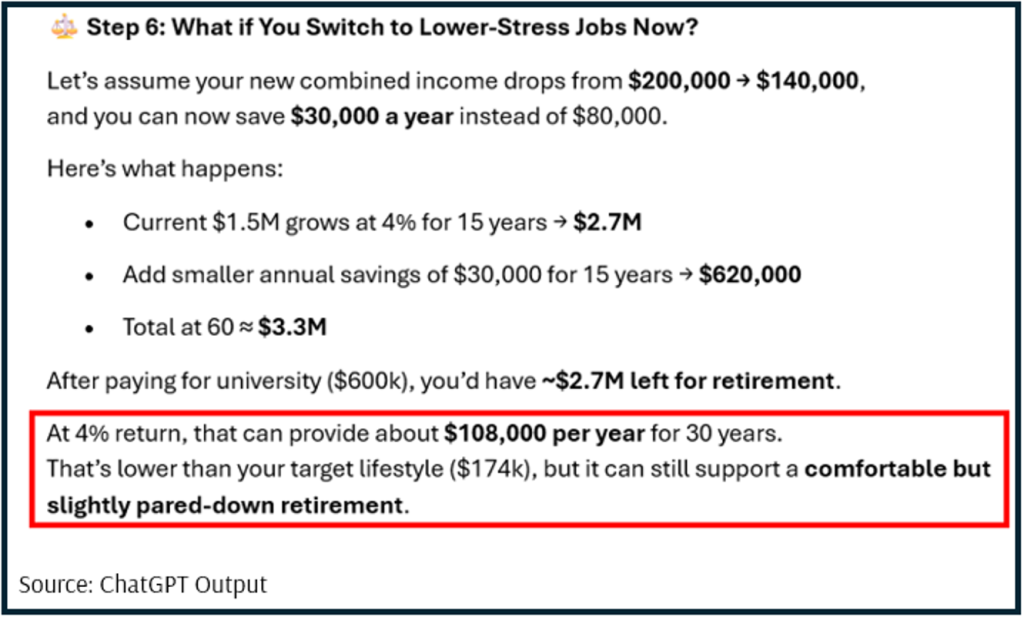

B) Incorrect Conclusion

- Retirement income of $108,000 per year represents only 62% of the target lifestyle of $174,000!

- Yet ChatGPT made a fundamental misjudgement. It described this merely as lower than the target lifestyle and made a fatally flawed recommendation that this income could still support a comfortable but slightly pared-down retirement.
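The shortfall in point B is simple arithmetic. A quick check like the minimal Python sketch below (the function name is ours, for illustration) shows why "slightly pared-down" is misleading: the projected income covers less than two-thirds of the target, leaving a large gap every year.

```python
def coverage_and_gap(projected_income, target_spend):
    """Share of target spending covered, and the annual dollar shortfall."""
    coverage = projected_income / target_spend
    shortfall = target_spend - projected_income
    return coverage, shortfall


coverage, shortfall = coverage_and_gap(108_000, 174_000)
print(f"Coverage: {coverage:.0%}, annual shortfall: ${shortfall:,}")
# prints "Coverage: 62%, annual shortfall: $66,000"
```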

C) Inaccurate Charts

- The generated charts were manifestly inaccurate and inconsistent with the accompanying descriptions, even though those descriptions were generally accurate.

In short: ChatGPT offered information, not advice. It answered the question, but not the why behind it. It also carried a high risk of providing wrong information.

These are not cosmetic issues — they are fundamental errors that could mislead someone making life-changing decisions!

The Heart of It: Numbers Are the Easy Part

When Bob and Elsie asked, “Can we afford to slow down?”, they were not asking me for a percentage, a graph, or a complex simulation.

Their real questions were:

- “Will our kids be okay?”

- “What happens if one of us falls ill?”

- “Are we being irresponsible by wanting rest?”

AI cannot hear the hesitation in someone’s voice. It cannot sense a client’s relief on hearing that they are safe, nor flag a potential issue before it becomes one. It cannot tell when someone is smiling politely but deeply anxious underneath. For a human adviser, this is the part of the job that really matters: having deep conversations with our clients.

The Value of a Human Adviser — A Lifelong Conversation

At Providend, we believe good financial planning is not just about running the right numbers. It is about understanding the person sitting across from us — their hopes, fears, blind spots, and the quiet things they do not always know how to articulate.

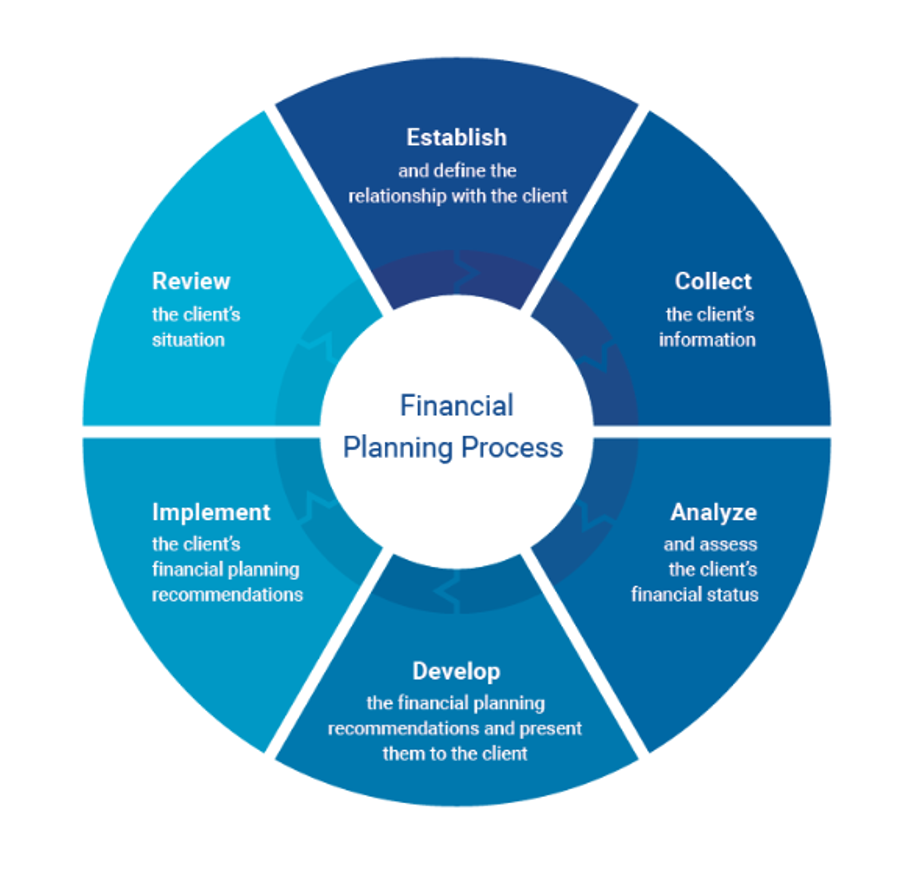

As advisers, we adapt the six-step financial planning process defined by the Financial Planning Standards Board (FPSB), a globally recognised framework that ensures holistic, values-based advice. It gives us structure, but the real work happens in the deep conversations and in the moments when clients feel supported.

Source: Financial Planning Standards Board (FPSB)

Step 1: Establish and Define the Relationship

Before any calculations, I spend time helping clients understand what the relationship between a client and their Client Adviser is really about. Trust begins when they realise my role is not to sell a product, but to walk with them through every decision ahead. As Providend is a fee-only wealth advisory firm, our clients can be assured that our advice is always aligned with their best interests.

Step 2: Identify Your Goals and Priorities

Before running any projections, we help clients define their non-negotiable or ikigai goals — what truly matters to them and their family. Is it financial independence? Time for children? Health and peace of mind? These goals become the compass for all decisions that follow.

In my experience, clients naturally think in terms of money first, but through conversation, we uncover the life priorities that really drive their decisions. This is why we always say at Providend: in order to live a good life, we must first make a life decision before making wealth decisions.

Step 3: Gather Complete and Accurate Information

We conduct a comprehensive Fact Find, gathering details of income, expenses, assets, liabilities, CPF balances and insurance coverage. This ensures the plan rests on accurate, complete data, something AI may miss.

In Singapore, factors like CPF, NS obligations and property rules significantly affect outcomes. Clients who forget certain details, or assume they are minor, may be surprised by how big a difference they make. As a human adviser, I notice these gaps and probe to ensure nothing important is overlooked. Without these ‘local details’, the wealth plan simply is not reliable.

Step 4: Analyse and Evaluate Your Financial Status

With accurate data, I would assess my client’s financial health — cash flow, net worth, risk exposure, and ability to meet their goals.

Unlike AI, we review assumptions together with clients, present “what-if” scenarios and incorporate their feedback. I guide clients to ask the right questions, help them understand trade-offs and make sense of complex information. The goal is not just to deliver numbers but to give clients clarity and confidence in their decisions. Often, this takes place over several meetings.

Step 5: Develop and Present a Holistic Plan

We consolidate everything into a clear, comprehensive Wealth Management Plan — one that integrates investments, insurance, CPF, retirement, children’s education, mortgage, and estate considerations.

The plan is designed to be intuitive and actionable, reflecting both the client’s financial situation and their personal values.

Step 6: Implement and Review the Plan Continuously

Finally, we act as accountability partners — helping clients take action, stay on track, and adapt to change.

I meet clients regularly to advise them on:

- New government policy changes (CPF, tax, housing)

- Implications of life events and personal milestones (career changes, children’s education, ageing parents)

In my experience, this ongoing engagement is where human advisers provide real value. We notice subtle changes in priorities or circumstances and adjust plans where relevant, something AI cannot do.

How I Would Advise Bob and Elsie Personally

If I were advising Bob and Elsie, I would go beyond running projections and stress-testing numbers. I would:

- Explore their lifestyle trade-offs – Discuss what “lower-stress jobs” really mean for them emotionally and financially, and what compromises they are willing to make.

- Highlight hidden risks – Review insurance coverage, health contingencies and CPF assumptions to ensure their retirement plan is resilient.

- Translate numbers into real-life scenarios – Show them how lifestyle changes today would affect retirement travel, education plans for their children, or their ability to leave a legacy.

- Guide them through the emotional decisions – Pause to listen when they are anxious about income reduction or stress, and help them weigh non-financial benefits like health, time with family and peace of mind.

- Create a living roadmap – Develop a plan that is flexible and adaptive, with checkpoints to reassess their situation as life changes.

This may take several weeks or months of planning, but eventually Bob and Elsie would have a much clearer picture of their next steps and could move forward without worrying about potential missteps.

The Bottom Line: Humans and AI Together

AI can process data, but it cannot discern meaning. It does not know when your goals have shifted, when stress has affected your priorities, or when family needs have changed. It cannot interpret the why behind the numbers.

It cannot sense when a client is exhausted.

It cannot hear the hesitation in their voice when they ask, “Are we going to be okay?”

That is where a human adviser adds irreplaceable value — by combining technical expertise with empathy and accountability.

At Providend, we use technology to make our analysis more efficient. But the heart of our work remains human: understanding your story and helping you live it well.

Five Key Takeaways

- AI can assist, but it cannot advise. ChatGPT can calculate, but it cannot understand your motivations or values, and its calculations may be inaccurate.

- You need financial literacy to use AI meaningfully. Without the right knowledge, users may misinterpret or over-rely on incomplete or inaccurate outputs.

- Information overload is real. AI’s lengthy reports can overwhelm those without the time or technical background.

- Local context matters. AI misses Singapore-specific realities (CPF, NS, tax and property rules), leading to inaccurate conclusions.

- True advice requires empathy and partnership. A Providend Client Adviser offers not just a plan, but accountability, wisdom and ongoing guidance through every life transition.

It Is About Life, Not Just Money

ChatGPT can generate information.

A human adviser helps you make decisions that honour your life’s purpose.

At Providend, that is what holistic wealth planning means — using money as a tool to help you live meaningfully, confidently, and with peace of mind.

Because the best advice does not just shape your finances.

It shapes your life.

This is an original article written by Foo Jit Hwee, Client Adviser at Providend, the first fee-only wealth advisory firm in Southeast Asia and a leading wealth advisory firm in Asia.

For more related resources, check out:

1. How to Make Life Decisions (Ikigai Decisions)

2. To Live the Good Life, Make Life Decision First Before Wealth Decisions

3. Here’s Why We Charge a Higher Fee Than Robos

To receive first-hand wealth insights from our team of experts, we invite you to subscribe to our weekly newsletter.

Through deep conversations with our advisers, you will gain clarity on what matters most in life and what needs to be done to live a good life, both financially and non-financially.

We do not charge a fee at the first consultation meeting. If you would like an honest second opinion on your current investment portfolio, financial and/or retirement plan, make an appointment with us today.